Nvidia's accelerated computing is much more than AI accelerator hardware, but even in chip design alone, competitors find it extremely hard to catch up.

The reason is that Nvidia uses AI to help design its chips, given the complexity and immense scale of the task. Its chips are among the largest and most complex in the world, and building them without AI assistance would be nearly impossible.

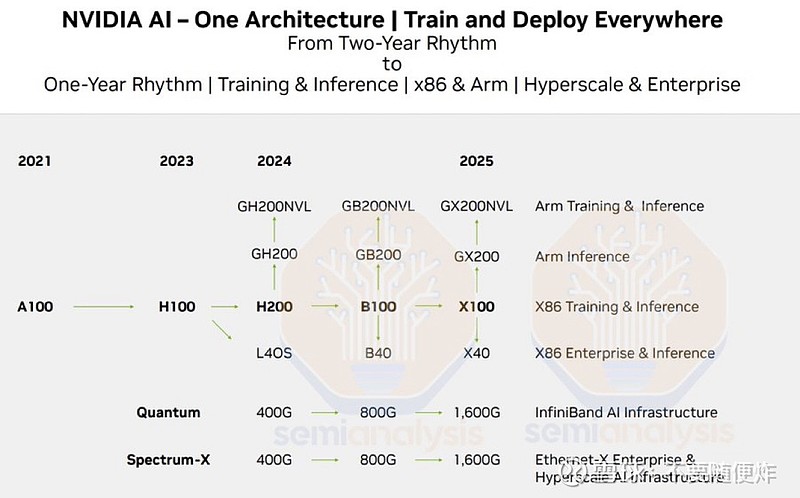

Nvidia has also moved to a yearly release cadence, replacing its previous strategy of launching AI chips every two years.

🔹 Nvidia GPU Architectures with CUDA

Ampere: A100, 7nm (May 14, 2020)

Hopper: H100, 5nm (Sep 20, 2022), H200, 5nm (Q2-2024)

📊 Production: 550K units (2023), 1.5-2M units (2024)

Blackwell: B100, TSMC 4NP (2H 2024), 1st multi-die (chiplet) design

🔹 Microsoft Maia 100:

Data center rollout: Early 2024

Tailored for Copilot/Azure OpenAI Service

Development: ~3 years, OpenAI input

🔹 Google TPU (Tensor Processing Unit):

Internal use: 2015

Third-party use via Cloud: 2018

🔹 Amazon AWS:

Inferentia: Released 2019

Trainium: First appearance 2021

🔹 Tesla Dojo:

Mentioned: April 2019

Announced: AI Day, August 19, 2021

🔹 Meta MTIA:

Meta Training and Inference Accelerator

Announced: May 2023

🔹 AMD Instinct MI300 with ROCm Software Stack:

Production start: End of 2023

🔹 Intel Gaudi 3 with SynapseAI Software Suite:

Scheduled launch: 2024